
21 May 2025 Security Tips Florent Montel

Secured Pentest: the Patrowl referential


Anything related to testing the security of a web application — whether it’s vulnerability scans, fuzzing, controlled brute force, or automated penetration tests — is often seen as risky. And that’s understandable: no one wants their application to crash because of an improperly managed test.

At Patrowl, we take this concern very seriously. That’s why we’ve established a strict internal benchmark to ensure our testing always remains safe. Every new test is validated according to a simple rule: it must never generate more traffic than an old smartphone, like a Samsung Galaxy S6 Edge.

Why use this model as an example?

Because it represents a very basic level of power and traffic compared to today’s standards. It featured an Exynos 7420 processor (8 cores, up to 2.1 GHz) and 3 GB of RAM. At the time, it was high-end. Today, it’s far surpassed by any cheap smartphone.

In other words, if your application crashes under a load equivalent to what an S6 Edge generates, it’s in constant danger... A child, a script kiddie, or a poorly designed weather app could just as easily bring it down.

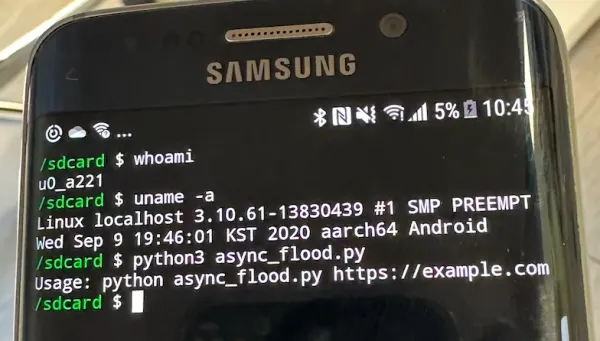

To simulate this behavior, we simply created a h@x0r Python script that sends asynchronous web requests to a target. We run this script from a basic Termux app (no root required on the phone) on our Samsung device (script included in the appendix).

Security testing: the steps in our methodology

For our tests, we set up a lab with some of the worst examples of website hosting environments. To simulate unscrupulous hosting providers, we started by creating a very simple AWS machine template with the following specs:

Latest version of MariaDB database listening on localhost

An Apache2 service listening on port 80 (no HTTPS, to keep things simple)

A WordPress instance with a few basic plugins:

BackWPup

Contact Form 7

Google for WooCommerce

WooCommerce

WPCode Lite

⚠️ These plugins were not chosen randomly. Some are known to trigger false positives in many scanners, while others can lead to serious security issues if misconfigured or improperly used.

However, it wouldn’t be fair to make a public comparison between what Patrowl can detect versus other tools on this sample. It’s far too easy to manipulate tools to produce results that suit our narrative, resulting in biased analyses that could mislead our users.

That said, we do use this template internally for training purposes to clearly demonstrate the effectiveness of our product.

Here is our ready-to-use website:

It is important to note that we did not change any configurations of the installed services. The goal was just to simulate some of the worst-case hosting scenarios and to highlight the inherent risks of exposing such services directly on the Internet.

We then duplicated these setups across four typical AWS instance types:

🎤 Micro → t2.micro: 1 vCPU, 1 GB RAM, 20 GB SSD Storage

👕 Small → t2.small: 1 vCPU, 2 GB RAM, 20 GB SSD Storage

🥈 Medium → t2.medium: 2 vCPU, 4 GB RAM, 20 GB SSD Storage

💨 Large → t2.large: 2 vCPU, 8 GB RAM, 20 GB SSD Storage

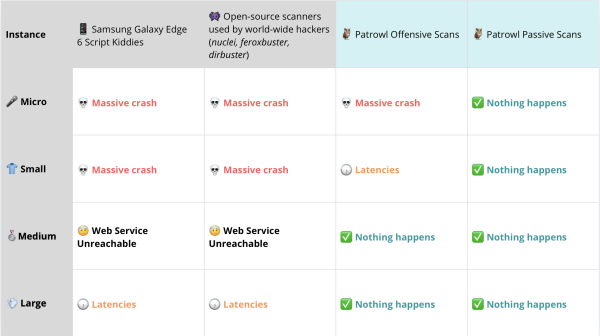

We then ran four distinct sets of scans to cover and analyze common use cases:

📱 Samsung Galaxy S6 Edge script kiddie → The Python script async_flood.py (see the appendix), launched from the Samsung device against the target

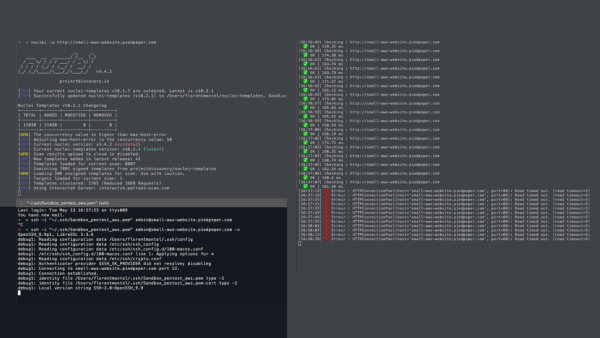

👾 Open-source scanners used by hackers worldwide (nuclei, feroxbuster, dirbuster) → nuclei/feroxbuster/dirbuster run with default configurations on a standard machine (a 2020 Mac)

🦉 Patrowl Offensive Scans → The full suite of offensive scans from Patrowl launched from our iso-prod platform

🦉 Patrowl Passive Scans → The full suite of passive scans from Patrowl launched from our iso-prod platform

A closer look at the results

To analyze the behavior of each machine, we deployed a simple Python monitoring script that measures response times for each web service and tracks causes and durations of any potential outages.
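A monitoring script of this kind can be sketched in a few lines. The version below is not the script we ran, only a minimal illustration: the names `Sample`, `probe` and `outages`, the timeout, and the rule that any failed or non-2xx response counts as "down" are all assumptions made for the example.

```python
import time
import urllib.request
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Sample:
    timestamp: float          # when the probe started (seconds since the epoch)
    latency: Optional[float]  # response time in seconds, None if the probe failed


def probe(url: str, timeout: float = 5.0) -> Sample:
    """Send one GET request and record how long the full response took."""
    started = time.time()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            response.read()
        return Sample(started, time.time() - started)
    except Exception:
        # Timeouts, refused connections and HTTP error statuses all count as a failure here
        return Sample(started, None)


def outages(samples: List[Sample]) -> List[Tuple[float, float]]:
    """Return (start, duration) for each run of consecutive failed probes."""
    result = []
    down_since = None
    for sample in samples:
        if sample.latency is None and down_since is None:
            down_since = sample.timestamp          # outage begins
        elif sample.latency is not None and down_since is not None:
            result.append((down_since, sample.timestamp - down_since))
            down_since = None                      # outage ends
    if down_since is not None and samples:
        # Still down at the end of the observation window
        result.append((down_since, samples[-1].timestamp - down_since))
    return result
```

Calling `probe` once per second during a scan and passing the collected samples to `outages` gives the start and duration of every interruption, while the `latency` values show slowdowns.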

This allowed us to identify four types of behavior across the servers during the scans:

💀 Massive crash: the server crashes immediately and no service responds (including SSH). AWS admin intervention is required for a soft reboot.

🤕 Web Service Unreachable: the web service can’t handle incoming requests during the scan, but the server remains up. The site is inaccessible only during the scan and returns to normal afterwards.

🕠 Latencies: the website experiences significant response delays during the scan. The site remains accessible but is noticeably slow.

✅ Nothing happens: no disruption detected during the scan for any users.
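The four categories above can be expressed as a small decision rule. The sketch below is illustrative only: the `Impact` enum, the `classify` function and the `slow_factor` threshold (how many times slower than the baseline counts as "latencies") are assumptions for the example, not values taken from our tests.

```python
from enum import Enum
from typing import Optional


class Impact(Enum):
    MASSIVE_CRASH = "massive crash"
    WEB_UNREACHABLE = "web service unreachable"
    LATENCIES = "latencies"
    NOTHING = "nothing happens"


def classify(ssh_up: bool, web_latency: Optional[float], baseline: float,
             slow_factor: float = 3.0) -> Impact:
    """Map one observation taken during a scan to one of the four behaviors.

    web_latency is None when the web service did not answer at all.
    slow_factor is an assumed threshold, not a value from the article.
    """
    if web_latency is None:
        # No web answer: the whole server is down only if SSH is gone too
        return Impact.WEB_UNREACHABLE if ssh_up else Impact.MASSIVE_CRASH
    if web_latency > baseline * slow_factor:
        return Impact.LATENCIES
    return Impact.NOTHING
```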

To be honest, the results impressed us quite a bit.

It’s surprisingly easy to bring down a poorly configured or underpowered server: sometimes, a simple command line is enough to completely destabilize it. 💀 Massive crash cases are far more common than people realize.

As expected, scans performed with Patrowl are much gentler on applications than a basic Python script run from an old 2015 phone. The only machine that didn’t hold up was the 🎤 micro instance. That said, even a few manual refreshes (F5) from a browser can shake this service — such a fragile server simply has no place online.

For the others — small, medium, or large — we found that Patrowl has very limited impact on the tested services, despite the modest configurations (we’re talking entry-level AWS instances here). These machines would stand far less chance against the classic tools commonly used in penetration tests or Bug Bounty programs, where the risk of crashes is much higher.

So, Patrowl’s promise of being less aggressive than a Samsung Galaxy S6 Edge is definitely kept!

Hackers will not ask, and they won't care

Of course, scanning with Patrowl minimizes the risk of crashes during controlled scans, but rest assured that hackers around the world will not treat your servers as carefully as professional offensive security providers do.

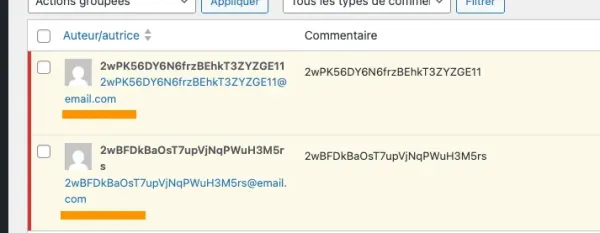

We also analyzed what happened to our exposed server outside of our own scans. We kept a clean 🥈 Medium instance up for a few days to see what would happen.

This website should be unknown to the rest of the internet: it is not indexed, it has no HTTPS certificate (and therefore no crt.sh entries), and it cannot be detected by classic EASM tools. It is therefore one of the least detectable websites on the internet, and yet…

Our AWS Micro and Small instances crashed many times during our analyses, not because of our own scans but because of numerous scans from known and unknown providers. We had to reboot them many times.

We recorded around 100k web requests coming from more than 1,500 unknown IPs (AWS, Scaleway, Netiface?, Contabo, Yandex).

Some unexpected comments appeared on one of our articles, posted from unknown IPs (the work of a well-known intrusive Nuclei template; once again, they did not ask).

This is what happens to an interface that is barely visible on the internet in less than a week. Now imagine what your more visible websites go through every single day.
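Figures like these can be derived from the web server's access log. The sketch below is one possible way to do it, not the tooling we used: the function name `count_clients` is hypothetical, and it assumes the usual common/combined log layout in which the client address is the first field of each line.

```python
from collections import Counter
from typing import Iterable, Tuple


def count_clients(log_lines: Iterable[str]) -> Tuple[int, Counter]:
    """Count total requests and requests per client IP.

    Assumes the common/combined access-log layout, where the client
    address is the first whitespace-separated field of each line.
    """
    per_ip = Counter()
    for line in log_lines:
        fields = line.split()
        if fields:                      # skip empty lines
            per_ip[fields[0]] += 1
    return sum(per_ip.values()), per_ip
```

Feeding it the lines of an access log gives the total request count, and `len(per_ip)` gives the number of distinct source addresses; `per_ip.most_common(10)` lists the noisiest ones.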

Be prepared

Exposing web services online is no trivial matter. You must be prepared for them to be constantly probed by the entire internet, as we have just demonstrated.

From our perspective and our analyses, any exposed web application should, at a minimum, be able to withstand a scan from a basic open-source web scanner such as Nuclei, run with default settings from a standard workstation (a test that is quite easy to perform, by the way).

Our Patrowl Galaxy S6 Edge benchmark uses much lower volumes than a Nuclei scan, but we believe it is a strong test of whether your infrastructure can handle the kind of abuse it is likely to face on the internet (for example, a misconfigured Nuclei launched by a script kiddie).

Of course, in real-world business, many exposed web servers are protected by a load balancer, reverse proxy, WAF, or CDN, which can easily handle simple Nuclei scans (a basic F5 instance, for example, can handle 500k simultaneous HTTP requests). This is one of the best ways (though not the cheapest) to protect your website.

But sometimes it is not possible to protect your server with this kind of expensive equipment or configuration, most often because it is hosted by a third-party provider that does not offer such a service, or because you host it yourself and don’t want to spend money on such services.

Hosting 101

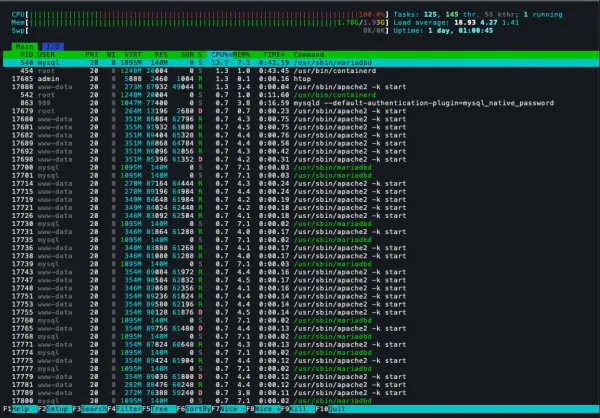

The main cause of crashes on unprotected websites is poor memory handling by web services.

When you install web services on a low-cost server, each incoming request often spawns multiple processes or threads. Depending on the page accessed — such as in our WordPress setup — this can involve PHP and MariaDB. Under load, this quickly consumes available RAM, eventually exhausting system resources and causing the entire instance to crash.
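A back-of-the-envelope calculation shows how quickly this happens. The figures in the sketch below (memory reserved for the OS and MariaDB, memory per Apache/PHP worker) are illustrative assumptions, not measurements from our lab, and the function names are hypothetical.

```python
def max_workers(total_ram_mb: int, reserved_mb: int, per_worker_mb: int) -> int:
    """Largest number of concurrent workers that fits in RAM without swapping."""
    available = total_ram_mb - reserved_mb
    return max(available // per_worker_mb, 0)


def ram_needed_mb(concurrent_requests: int, per_worker_mb: int, reserved_mb: int) -> int:
    """RAM required if every concurrent request gets its own worker."""
    return reserved_mb + concurrent_requests * per_worker_mb


# Assumed figures: 1 GB instance, 400 MB for the OS and MariaDB, 60 MB per PHP worker
print(max_workers(1024, 400, 60))    # workers that fit in memory
print(ram_needed_mb(300, 60, 400))   # MB needed to serve 300 requests at once
```

Under these assumptions a 1 GB instance has room for about ten workers, while serving 300 requests at once with one worker each would need roughly 18 GB. Unless the number of workers is capped, the server runs out of memory long before that.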

To protect such a poor configuration, you can of course increase the RAM available on the VPS host, but as we saw, even a Large (8 GB RAM) AWS EC2 instance cannot properly handle a basic Nuclei scan without latency for other users. 8 GB of RAM is normally more than enough to support a typical web service and should be able to handle a Nuclei scan; you just need to configure it properly.

To understand the issue, it’s important to know that traditional web servers like Apache were originally designed to handle a moderate number of simultaneous connections efficiently, but not the kind of high concurrency demanded by modern traffic or automated scanners.

In contrast, reverse proxies like Nginx are built around an event-driven architecture, which makes them highly efficient at managing thousands of concurrent connections with minimal resource usage, unlike Apache, which spawns a process or thread per request. A reverse proxy also helps limit the number of requests before they reach high-RAM-consuming services.

Nginx works like a protective buffer: it absorbs, manages, and filters incoming web traffic, keeping Apache, PHP, and the database from being overwhelmed, even on small VPS setups.

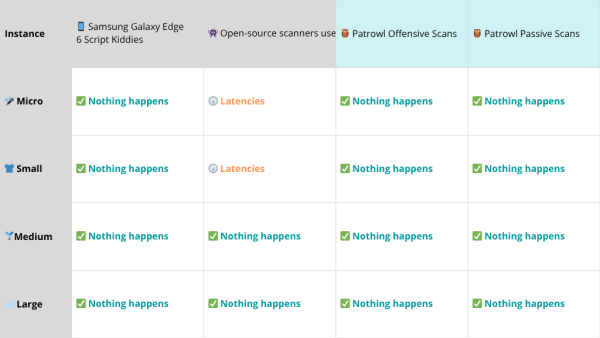

We then placed all our instances behind a very simple Nginx configuration, adding proxy_cache and a very basic limit_conn in front of every WordPress site. This prevents a single IP (such as one running Nuclei) from overwhelming the backend with too many concurrent connections:

    proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=CACHE:10m max_size=100m inactive=5m use_temp_path=off;

    # Zone that counts concurrent connections per client IP. limit_conn below
    # needs it; this line was missing from the snippet and 10m is an assumed size.
    limit_conn_zone $binary_remote_addr zone=conn_limit_per_ip:10m;

    server {
        listen 80;
        server_name medium-aws-website.piedpeper.com;

        location / {
            proxy_pass http://127.0.0.1:8080; # Apache runs on port 8080
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;

            # Use the CACHE zone declared by proxy_cache_path above
            proxy_cache CACHE;

            # At most 10 concurrent connections per client IP
            limit_conn conn_limit_per_ip 10;
        }
    }

We then ran exactly the same checks as before and, unsurprisingly, the results were far better in terms of WordPress availability during the scans.

On a very cheap instance, we could simply adjust the limit_conn value. But this shows how easily you can protect a basic infrastructure from modern open-source scanners.
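To make the effect of limit_conn concrete, here is a minimal Python model of per-IP connection limiting. It is not how Nginx is implemented, only a sketch of the behavior; the `ConnectionLimiter` class and its method names are hypothetical.

```python
from collections import defaultdict


class ConnectionLimiter:
    """Track open connections per client and refuse those above a limit."""

    def __init__(self, limit: int = 10):
        self.limit = limit
        self.open = defaultdict(int)   # client IP -> currently open connections

    def acquire(self, client_ip: str) -> bool:
        """Return True if the connection is accepted, False if it is refused."""
        if self.open[client_ip] >= self.limit:
            return False               # Nginx answers 503 by default in this case
        self.open[client_ip] += 1
        return True

    def release(self, client_ip: str) -> None:
        """Call when a connection from client_ip closes."""
        if self.open[client_ip] > 0:
            self.open[client_ip] -= 1
```

A scanner that opens dozens of connections from one address only ever gets `limit` of them through to the backend at a time, while other visitors, counted separately, are unaffected.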

Now you know

Of course, the ecosystem surrounding exposed web applications is complex, and simplifying their overall protection to just installing a reverse proxy would be reductive. However, this article demonstrates how, with basic configurations and minimal expertise, you can effectively shield an application from the high volumes of traffic generated by modern open-source scanners.

For us, this is the minimum baseline needed to securely expose a web application online.

🦉 Patrowl ensures that all our scans follow strict volume limits, allowing us to deliver effective vulnerability testing with minimal impact on your infrastructure, keeping your applications safe and resilient under pressure.

Appendices

Go Further in Apache Optimization

Here are a few steps if you want to go further in optimizing the memory used by an Apache server running WordPress.

You can:

Choose the event MPM (Multi-Processing Module) of Apache instead of prefork

Use PHP-FPM to handle PHP requests (https://hostman.com/tutorials/how-to-install-php-and-php-fpm-on-ubuntu-24-04/)

Limit resource usage with MaxConnectionsPerChild (or MaxRequestsPerChild)

Limit MaxRequestWorkers and ThreadsPerChild

Here is a very basic configuration example for a Small VPS instance (in apache2.conf or httpd.conf):

    <IfModule mpm_event_module>
        StartServers 1
        MinSpareThreads 10
        MaxSpareThreads 25
        ThreadLimit 32
        ThreadsPerChild 10
        # Note: Apache caps MaxRequestWorkers at ServerLimit x ThreadsPerChild,
        # so with ServerLimit 1 below the effective value is 10, not 50.
        MaxRequestWorkers 50
        MaxConnectionsPerChild 500
    </IfModule>

    KeepAlive On
    MaxKeepAliveRequests 50
    KeepAliveTimeout 1
    ServerLimit 1

async_flood.py

Script used to generate HTTP traffic from our Samsung Galaxy S6 Edge:

    zerolte:/ # cat /sdcard/async_flood.py

    import asyncio
    import aiohttp
    import time
    import random
    import sys

    if len(sys.argv) != 2:
        print("Usage: python async_flood.py https://example.com")
        sys.exit(1)

    BASE_URL = sys.argv[1].rstrip('/')
    ENDPOINTS = ["/", "/about", "/contact", "/blog", "/products", "/api/data", "/login", "/search?q=test"]
    REQUESTS_PER_SECOND = 300
    DURATION_SECONDS = 20

    # Shared counters updated by every request
    stats = {
        "success": 0,
        "errors": 0,
        "response_times": [],
    }

    async def send_request(session, i):
        """Request a random endpoint and record its status and response time."""
        url = BASE_URL + random.choice(ENDPOINTS)
        start = time.perf_counter()
        try:
            async with session.get(url) as response:
                await response.text()
                duration = time.perf_counter() - start
                stats["response_times"].append(duration)
                if response.status == 200:
                    stats["success"] += 1
                else:
                    stats["errors"] += 1
                print(f"[{i}] {url} → {response.status} ({duration:.3f}s)")
        except Exception as e:
            stats["errors"] += 1
            print(f"[{i}] {url} → ERROR : {e}")

    async def main():
        async with aiohttp.ClientSession() as session:
            start_time = time.time()
            total_requests = REQUESTS_PER_SECOND * DURATION_SECONDS
            tasks = []
            for i in range(total_requests):
                # Pace the launches so request i starts at i / REQUESTS_PER_SECOND seconds
                elapsed = time.time() - start_time
                expected = i / REQUESTS_PER_SECOND
                delay = expected - elapsed
                if delay > 0:
                    await asyncio.sleep(delay)
                task = asyncio.create_task(send_request(session, i + 1))
                tasks.append(task)
            # Wait for every in-flight request before closing the session
            await asyncio.gather(*tasks)

        print("\n=== CHECKED ===")
        total = stats["success"] + stats["errors"]
        avg_time = sum(stats["response_times"]) / len(stats["response_times"]) if stats["response_times"] else 0
        print(f"Total requests : {total}")
        print(f"Success : {stats['success']}")
        print(f"Errors : {stats['errors']}")
        print(f"Average Time : {avg_time:.3f} s")

    if __name__ == "__main__":
        asyncio.run(main())