Solution

-

Advanced External Attack Surface Management (EASM)

Discover and monitor all internet-facing assets.

-

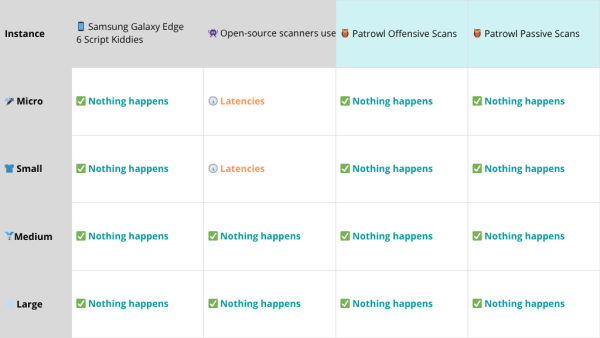

Continuous Automated Penetration Testing

Simulate real-world attacks to continuously test your defenses.

-

Continuous Threat Exposure Management (CTEM)

Continuously validate and prioritize real-world external exposures.

-

Pentest as a Service (PTaaS)

On-demand penetration testing combining automation and human expertise.

-

Dynamic Application Security Testing (DAST)

Test running web applications to detect exploitable vulnerabilities in real time.

-

External Vulnerability Scanner

Detect known vulnerabilities across infrastructure and applications.

-